Open source is often a hot topic. AI, funding models, retention, and gatekeeping regularly pop up on Hacker News. Outside of novel research, however, I rarely see articles discussing how security affects open-source software (OSS). So today, I want to cover why the Common Vulnerabilities and Exposures (CVE) system is not fit for open-source software.

Most maintainers I know build software for fun or out of a sense of purpose. They generally aren’t looking to commercialise it. The CVE system, however, assumes that the parties involved are in a commercial relationship, and that assumption has unintended consequences for the open-source community.

Maintainers end up combating misinformation and dealing with unrealistic expectations and abuse. They do not have the resourcing or bandwidth to triage, let alone resolve, the security bugs being raised against their projects. It is a source of friction, and when a hobby becomes a job, people start treating it like one: they make their creations closed source or abandon the ecosystem entirely. These are bad outcomes for the software industry and, perversely, worse for security too.

To address this, we need to cut demand for raising CVEs, educate security professionals about the nuances between OSS and commercial relationships, and make better decisions by using the right metrics. Hopefully, then, we will start to see a healthier and more productive relationship between researchers and maintainers.

Cutting Demand

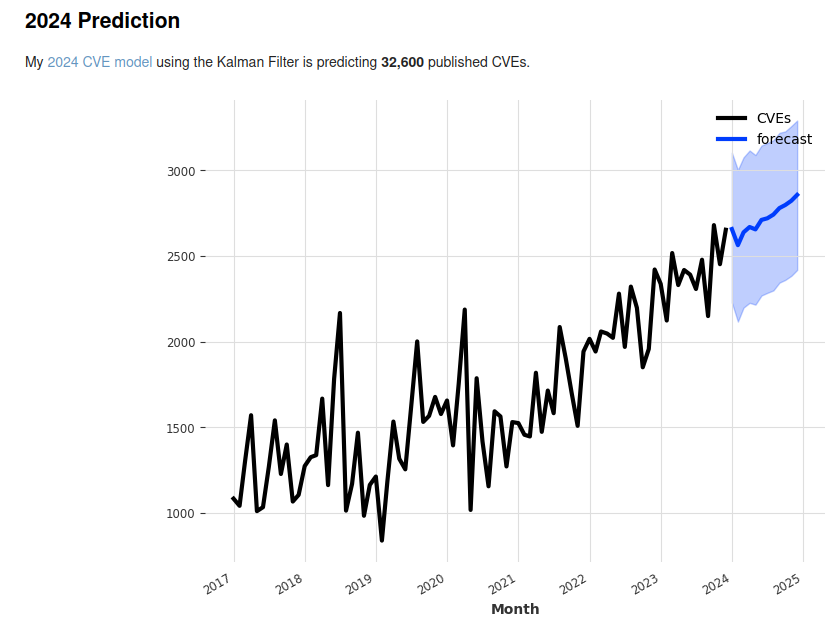

Jerry Gamblin publishes statistics on the CVEs raised each year. The number of reported CVEs has increased steadily over time, with significant bumps in recent years: in 2018, there were 16,510 records, while in 2023, there were 28,831. Severity scores, as measured by CVSS, have remained broadly static, but the number of reports is accelerating.
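To put those two figures in proportion, here is a small worked calculation. It is a sketch that uses only the yearly totals quoted above (16,510 for 2018 and 28,831 for 2023) and assumes a 365-day year; the function names are illustrative and not taken from any CVE tooling.

```python
# Yearly CVE totals quoted above (Jerry Gamblin's statistics).
CVES_2018 = 16_510
CVES_2023 = 28_831
YEARS = 2023 - 2018  # five-year span


def total_growth(start: int, end: int) -> float:
    """Fractional increase from the start count to the end count."""
    return end / start - 1


def cagr(start: int, end: int, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate linking start to end."""
    return (end / start) ** (1 / years) - 1


def per_day(count: int, days: int = 365) -> float:
    """Average number of records per day, assuming a 365-day year."""
    return count / days


print(f"Total growth 2018-2023: {total_growth(CVES_2018, CVES_2023):.1%}")  # 74.6%
print(f"Compound annual growth: {cagr(CVES_2018, CVES_2023, YEARS):.1%}")   # 11.8%
print(f"Average CVEs per day in 2023: {per_day(CVES_2023):.1f}")            # 79.0
```

Under those assumptions, the count grew by about 75% over five years, roughly 12% a year compounded, and 2023 averaged about 79 new CVEs a day, which lines up with the figure of over 80 a day quoted for January 2024.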

Every CVE is reported by a human. While other factors contribute to the growth trajectory, it is that human element that matters most. Charlie Munger often said, “Show me the incentive, and I’ll show you the outcome.” What incentives exist to raise CVEs?

The story of ‘Cyber’ is that it rewards technical nuance, commands professional respect, and is an exponentially growing market. The reality is that people just want to be protected from bad actors and to feel safe, and there will always be a hard limit on how much they will pay for that. Jobseekers buy into the ‘Cyber’ narrative and see it as a fast track to getting rich, and we need to change that. Because the accepted certifications are arduous, and hiring managers do not value shallow content or toy projects, people seek other pathways to get an interview. Raising CVEs, unfortunately, helps with that.

Jobseekers scan open-source projects for bugs with the intention of raising a CVE record to pad their resume. The process intends for the reporter to work with maintainers to resolve meaningful security flaws, but the outcome is reporters who ghost and maintainers who burn out. Cyber aspirants may well consider this “within the rules” and may not understand the broader impact of their actions. Artificial intelligence will only accelerate this trend and make it even more difficult for maintainers to distinguish genuine issues from artificial reports. Maintainers care about keeping their software secure; let’s not make that harder.

What can we do? As a hiring manager, you should stop treating CVEs as a signal in your hiring decisions. There are other ways to assess technical chops, and other valuable qualities to look for, such as communication, curiosity, resilience, and business acumen.

As professionals, we should change the narrative so that it is about helping to protect people, not about learning and being quizzed on the nuances of IT quality. Most importantly, if we really want to cut demand, we need aspirants to internalise that there are no shortcuts.

Verification

The next challenge to address is verification. In the past, CVEs were raised by researchers who felt the burden of proof was on them. Today, we cannot rely on professional integrity alone, which leaves two other parties who could carry that burden: the CVE Numbering Authority (CNA) and the maintainer.

At the time of writing (Jan 29, 2024), MITRE is accepting over 80 CVE reports each day. The number of submitted reports is significantly higher, and content moderation is a notoriously difficult problem: even heavily resourced technology firms cannot solve it at scale. CVEs are technical and require expertise to verify. CNAs also face a moral constraint: longer verification processes mean larger exposure windows and less time for vendors or maintainers to respond effectively.

So, as an industry, we have pushed the verification effort onto OSS maintainers. Maintainers have the context and expertise, but there is an opportunity cost. A maintainer who is trying to make sense of a fake report, or to prove whether an issue is real, is a maintainer who isn’t building new features or patching genuine issues. Here’s one example.

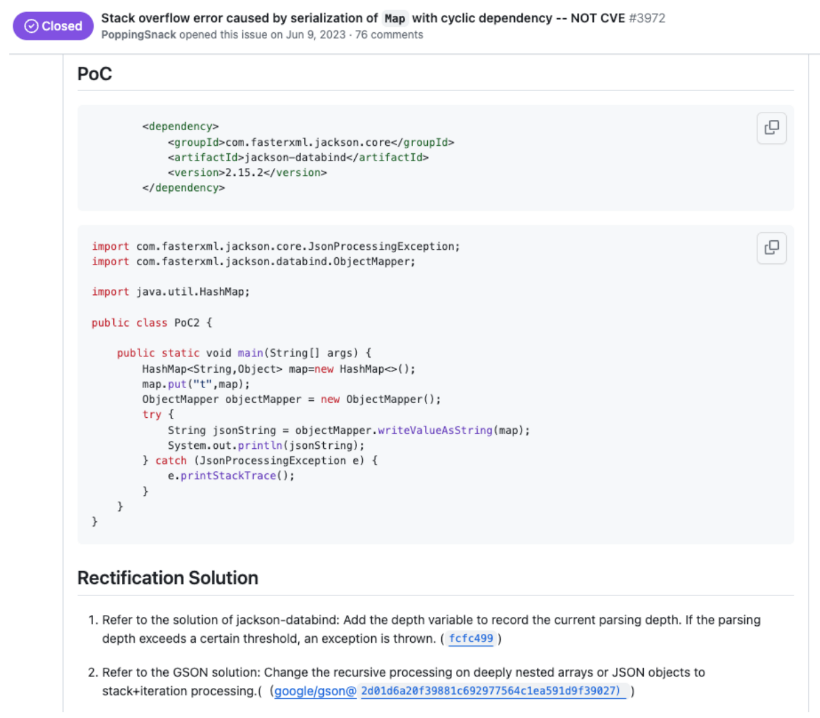

Jackson Databind is a Java library that converts data, most commonly JSON, to and from Java objects. It is used by almost every Java middleware application on earth, and it is maintained by one guy.

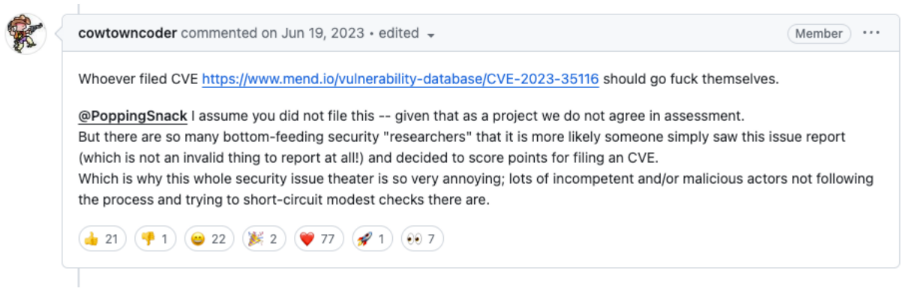

Recently, a CVE was raised against the library.

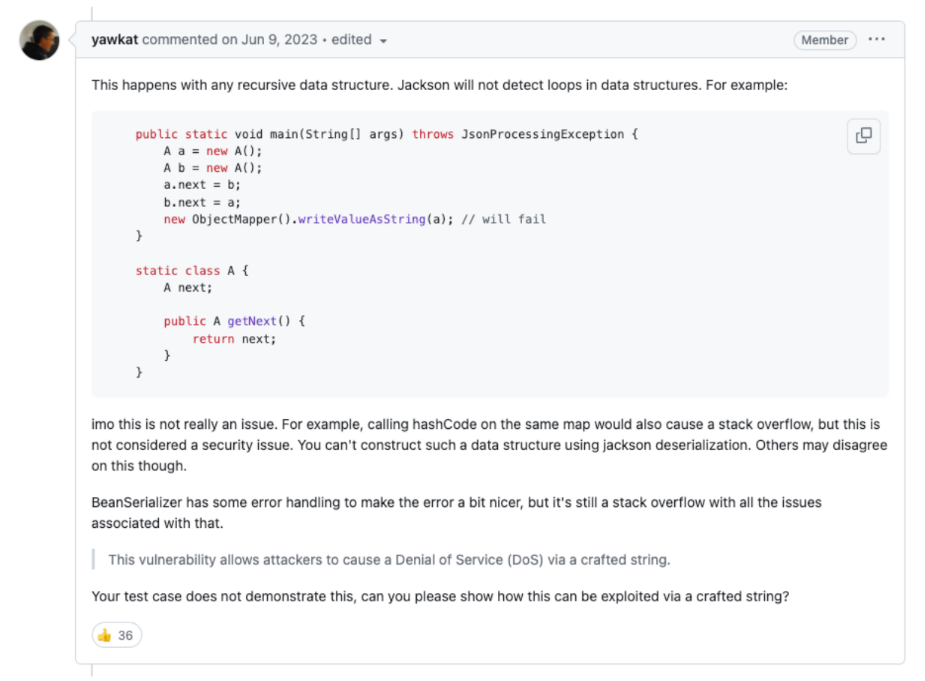

After reviewing the issue, the maintainer found that the submitter had not provided an actual proof of concept; instead, the report claimed that a ‘crafted string’ could be used to cause a denial of service (DoS). This is common security language, especially in timeboxed penetration testing exercises where a weakness is identified but cannot be exploited without a significant investment of time.

The maintainers explained that the data structure needed to trigger the problem cannot be constructed through deserialisation, so it is not reachable from untrusted input. The submitter responded by immediately filing the GitHub issue as a CVE report.

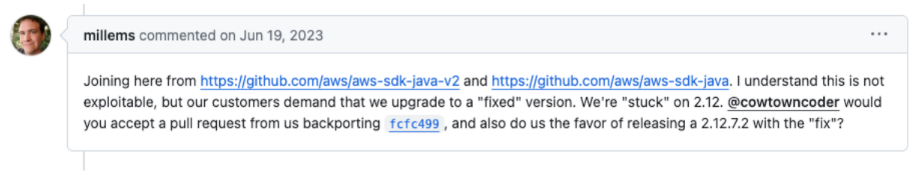

The thread continues with various information security professionals expressing outrage and frustration that the maintainers are not fixing the CVE and are breaching their SLAs. Amusingly enough, AWS itself states that its customers are demanding fixes.

Another clear example is the response from the cURL maintainers, who assumed good intent from security bug reporters but could not find a good way to counter the misinformation. That article is available here.

Should maintainers be in charge of verifying the validity of reports? I think the onus should be on the submitter and the CNA, not the maintainer.

Response

The last part I wanted to talk about is how we use the CVE system. The CVE system was created when OSS was nascent and vendors were accountable for responding to security issues. When you work with a software vendor, there is a clear contract that defines SLAs, communication points, scope, commercial terms, and legal accountability. Open-source maintainers, by contrast, generally license their software as “free to use as-is”. There is no relationship and there are no commercial terms. They don’t have SLAs or any real obligation to listen to you. This creates tension for people whose performance is measured by vulnerability counts, remediation timelines, and their ability to articulate progress to leadership.

When those factors are outside your control and you are at the mercy of a developer with an anime catgirl as their avatar, it can be a frustrating experience. When a package achieves widespread adoption, its maintainers start to receive stern demands, legal threats, blackmail, and worse. Maintainers also have to weigh other considerations, such as build reproducibility, accessibility, backwards compatibility, performance, and whether a feature is fun to work on. So, as professionals, why are we treating volunteers and hobbyists this way?

It comes back to how we measure success in our industry, and CVEs are a large part of that. Metrics help govern and measure the effectiveness of a security program, inform investment decisions, and guide management actions. Selecting good metrics deserves its own post, but the ones I most commonly see chosen are CVE Quantity, CVE Severity, and CVE Time to Remediation, largely because they are easy to measure.
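To show why these three metrics are so tempting, here is a minimal sketch of how they are typically computed from scanner output. The `Finding` record, its fields, and the `EXAMPLE-n` IDs are hypothetical; the severity bands follow the CVSS v3.x qualitative rating scale (Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0).

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass
class Finding:
    """A hypothetical scanner finding; field names are illustrative only."""
    vuln_id: str
    cvss: float                     # CVSS v3.x base score, 0.0 to 10.0
    opened: date                    # when the finding was first reported
    closed: Optional[date] = None   # None while the finding is still open


def severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def cve_quantity(findings: list[Finding]) -> int:
    """CVE Quantity: how many findings are on the books."""
    return len(findings)


def cve_severity(findings: list[Finding]) -> Counter:
    """CVE Severity: number of findings per qualitative rating."""
    return Counter(severity(f.cvss) for f in findings)


def time_to_remediation(findings: list[Finding]) -> Optional[float]:
    """CVE Time to Remediation: mean days from opened to closed, closed findings only."""
    days = [(f.closed - f.opened).days for f in findings if f.closed is not None]
    return mean(days) if days else None


# Hypothetical sample data, not real CVE records.
sample = [
    Finding("EXAMPLE-1", 9.8, date(2024, 1, 2), date(2024, 1, 12)),
    Finding("EXAMPLE-2", 5.3, date(2024, 1, 5), date(2024, 1, 25)),
    Finding("EXAMPLE-3", 7.5, date(2024, 1, 8)),  # still waiting on an upstream fix
]

print(cve_quantity(sample))         # 3
print(dict(cve_severity(sample)))
print(time_to_remediation(sample))  # 15
```

Each number is trivial to produce, which is the appeal. Notice, though, that every input (how many CVEs are published, what score they receive, and when an upstream fix lands) is decided outside the organisation running the calculation.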

“God grant me the serenity to accept the things that I cannot change, the courage to change the things I can, and the wisdom to know the difference.”

People say that vulnerability management is intractable. There is never enough funding, and business units don’t prioritise it. This leads to resentment, a firefighting culture, and occasional meltdowns. The core issue, though, is that teams cannot demonstrate meaningful change from investing in vulnerability management, because the CVE system is outside their control. You cannot change the number of incoming CVEs, their severity, or how long it’ll take you to patch.

There is a cottage industry built on CVE counts and dashboards. This is security theatre. We need to select better metrics and stop rewarding companies for perpetuating poor ones.

Summary

I hope I’ve been able to shine a light on something that’s been bugging me for a while. There are, of course, additional things to consider, but ultimately, I can see the trajectory we are on, and I want, first, to call it out and, second, to take action. So, if you take away four things from this post, please let them be these:

- Remember that maintainers are not commercial entities; they are volunteers providing software as-is. If we start making OSS a job, many maintainers will leave, and our lives will be poorer for it.

- Stop basing hiring decisions on the most easily gamed part of the system: lists of individual CVE records on a CV or in a casual message. When we treat CVEs and the CEH in the same breath, we will start to see jobseekers change their behaviour when seeking roles.

- Look at how you use CVE metrics internally and ask whether you have any real influence over them. If not, look for different ones that you DO have influence over.

- Chill! It’s easy to get worked up, but we all need to be on this journey together.

Thank you and until next time.

Cole Cornford